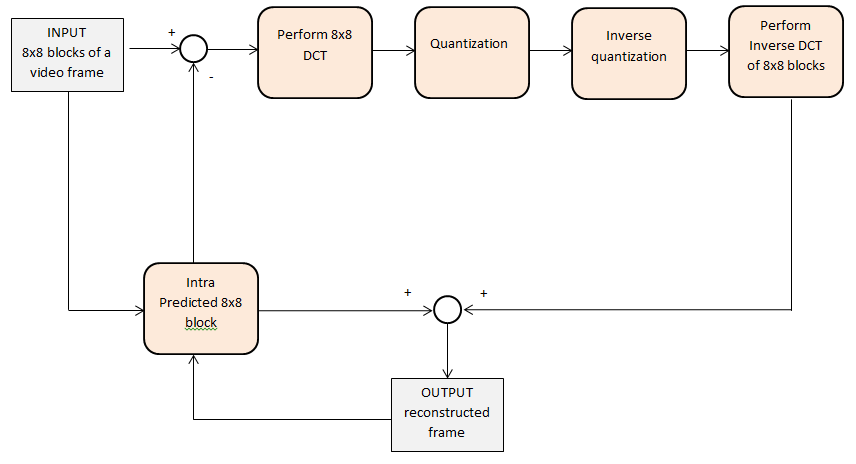

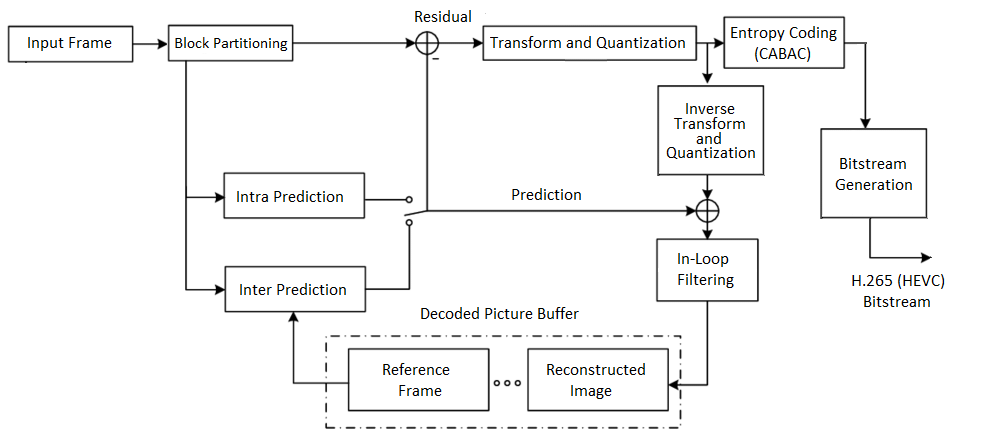

Alright, so I spent some time wrestling with inter prediction lately. You know, the whole deal where you predict parts of a video frame from other frames that have already been coded. The idea is simple enough: find where a block of pixels moved from, store a motion vector that points to it plus whatever small difference is left over, and you save space compared with coding the pixels directly. But actually getting it working smoothly? That’s another story.

Getting Started – The Setup

First thing I did was dig up the codebase I was supposed to integrate this into. It was a bit of a mess, honestly. Different pieces bolted together over time. My initial task was just to get any kind of inter prediction running. I grabbed some reference functions, kind of like examples, to see the basic flow. Plugged them in. Ran a test sequence.

Predictably, the output looked awful. Blocks all over the place, motion vectors pointing who knows where. It technically “ran”, but the compression was worse than just coding the frame directly, which defeats the whole purpose. So, step one: make it not garbage.

Digging In – Making it Work

The main chunk of work was getting the motion estimation part right. That’s the bit that actually searches around in the reference frame to find a good match for the current block. I started with a really simple search, just checking the positions in a small square area around the block. It was fast, but the matches were often bad, since anything that had moved further than that small area was simply out of reach.
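To make that first brute-force approach concrete, here is a minimal sketch of a small-square search. It is not the code from the project: it assumes frames are plain lists of rows of luma values, uses the sum of absolute differences (SAD) as the matching cost, and the names `sad` and `full_search` are made up for illustration.

```python
def sad(cur, ref, bx, by, rx, ry, size):
    """Sum of absolute differences between the block at (bx, by) in cur
    and the block at (rx, ry) in ref. Both blocks are size x size."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[ry + y][rx + x])
    return total


def full_search(cur, ref, bx, by, size, search_range):
    """Check every integer offset within +/- search_range of the block's
    own position. Returns (best_mv, best_cost) with best_mv = (dx, dy)."""
    height, width = len(ref), len(ref[0])
    # Start from the zero vector so there is always a valid answer.
    best_mv, best_cost = (0, 0), sad(cur, ref, bx, by, bx, by, size)
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            rx, ry = bx + dx, by + dy
            # Skip candidates that fall outside the reference frame.
            if rx < 0 or ry < 0 or rx + size > width or ry + size > height:
                continue
            cost = sad(cur, ref, bx, by, rx, ry, size)
            if cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv, best_cost
```

Calling `full_search(cur, ref, 16, 16, 8, 4)` would test up to 81 positions for one 8x8 block. That is cheap for a small range, but the true match has to sit inside that window for it to be found.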

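Then I tried expanding the search area. Better matches, sure, but man, it slowed things down to a crawl. When you check every position, the work grows with the square of the search range, so doubling the range roughly quadruples the number of candidates per block. Couldn’t have that. It was a balancing act. What really helped was looking at how the motion vectors themselves were being predicted. Instead of starting the search from scratch every time, you can make a guess based on the motion vectors of neighboring blocks and search around that guess. Getting that initial guess closer to the real spot made a huge difference.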
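One common way to form that initial guess, used in H.264-style codecs, is the component-wise median of the motion vectors of the left, top, and top-right neighbors. I'm showing it as an illustration of the idea, not as the exact predictor from this codebase. The function names are hypothetical, and vectors are assumed to be `(dx, dy)` tuples.

```python
def median_mv(left, top, top_right):
    """Component-wise median of three neighboring motion vectors."""
    xs = sorted([left[0], top[0], top_right[0]])
    ys = sorted([left[1], top[1], top_right[1]])
    return (xs[1], ys[1])


def candidates_around(center, radius):
    """All integer motion vectors within +/- radius of a predicted vector.
    A small radius here replaces a large window around (0, 0)."""
    cx, cy = center
    return [(cx + dx, cy + dy)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]


# Usage: predict from the neighbors, then only test nearby vectors.
start = median_mv((4, 0), (5, -1), (12, 3))
to_test = candidates_around(start, 1)
```

The median is handy because one outlier neighbor (the `(12, 3)` above) does not drag the guess away from where the other two agree.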

I spent a lot of time just tweaking things:

- Search range: How far out should I look for a match?

- Search pattern: Checking every single spot? Or using smarter patterns like diamonds or hexagons?

- Block sizes: Should I try to predict big blocks? Small blocks? Both?

- Reference frames: Should I only look at the previous frame, or maybe frames further back?
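As an example of the "smarter pattern" option, here is a rough sketch of a diamond-style search. It is a simplified version that uses only a small four-point diamond and keeps stepping toward the cheapest neighbor until nothing improves. The `cost_fn` callback is an assumption on my part: it stands in for whatever block-matching cost the encoder uses, such as SAD against the reference frame.

```python
# Offsets of the four points of a small diamond around the current center.
SMALL_DIAMOND = [(0, -1), (-1, 0), (1, 0), (0, 1)]


def diamond_search(cost_fn, start, max_steps=32):
    """Greedy small-diamond search.

    cost_fn: takes a motion vector (dx, dy) and returns its matching cost.
    start:   the vector to begin from, e.g. a prediction from neighbors.
    Returns (best_mv, best_cost).
    """
    best = start
    best_cost = cost_fn(best)
    for _ in range(max_steps):
        improved = False
        cx, cy = best  # center of the diamond for this step
        for dx, dy in SMALL_DIAMOND:
            cand = (cx + dx, cy + dy)
            cost = cost_fn(cand)
            if cost < best_cost:
                best, best_cost = cand, cost
                improved = True
        if not improved:
            break  # no neighbor is better, so stop here
    return best, best_cost
```

The trade-off is that it only checks a handful of positions per step, so it is much cheaper than an exhaustive search, but it can get stuck in a local minimum. That is one reason a good starting point matters so much.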

Debugging this stuff is kind of annoying. You’re not looking for crashes, usually. You’re looking at slightly wrong pixel values, or motion vectors that are just a bit off. I ended up generating difference pictures – subtracting the predicted block from the actual block. When you see clear shapes or edges in the difference picture, you know your prediction is missing something. Lots of trial and error there.
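A difference picture like that takes only a few lines to produce. This sketch assumes 8-bit samples stored as lists of rows, and it adds an offset of 128 so that "no error" shows up as mid-gray instead of black. The function names and the offset are my choices for illustration.

```python
def difference_picture(actual, predicted, offset=128):
    """Per-pixel (actual - predicted), shifted by offset and clamped to
    0..255 so it can be viewed as an ordinary 8-bit image."""
    return [[max(0, min(255, a - p + offset))
             for a, p in zip(row_a, row_p)]
            for row_a, row_p in zip(actual, predicted)]


def mean_abs_error(actual, predicted):
    """Average absolute prediction error per pixel, as a single number
    to track alongside the visual check."""
    total = 0
    count = 0
    for row_a, row_p in zip(actual, predicted):
        for a, p in zip(row_a, row_p):
            total += abs(a - p)
            count += 1
    return total / count
```

With the offset, a good prediction gives a nearly flat gray image, and any leftover edges or shapes stand out as lighter or darker regions.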

The Result – Did it Fly?

Eventually, I got it to a pretty decent state. The motion vectors looked sensible, pointing generally where things were moving. The difference images were mostly gray mush, which is good – means the prediction was close. Most importantly, when I plugged it back into the main encoder, the file sizes for test videos actually went down. Noticeably down.

It wasn’t perfect, still some weirdness in fast-moving scenes, but it was a solid improvement. It felt good to finally see it contributing, making things smaller like it’s supposed to. It was a bit of a slog, messing with all those parameters and staring at blocky video, but we got there in the end.