Alright folks, let me walk you through this little project I cooked up – trying to predict Holger Rune’s matches. Sounds ambitious, right? Well, it was, but I learned a bunch along the way.

It all started with me being a tennis nut and thinking, “Hey, I wonder if I can actually predict who’s gonna win?” Rune’s got that fire, you know? So, I figured, why not him?

Step 1: Data, Data, Data!

- First, I went hunting for data. I scraped match results, player stats – everything I could get my hands on. Think ATP rankings, win-loss records, surface preferences, you name it. Used some basic Python scripts and Beautiful Soup to grab it all. It was messy, believe me. Dates all over the place, inconsistent naming… a real headache.

Step 2: Cleaning Up the Mess

- This was the grunt work. Had to clean up all that data. Standardized the dates, made sure player names matched up, handled missing values (there were tons!). Pandas in Python became my best friend here. Filling missing data with averages, dropping rows that were too incomplete – you know, the usual.

Step 3: Feature Engineering – Making Sense of It All

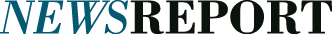

- Okay, now for the fun part. I started creating features – things that I thought might influence a match. Difference in rankings, recent form (wins in the last 10 matches), head-to-head records. Even tried to factor in surface type (clay, hard, grass) by giving players a “surface preference” score based on their past performance on each. It was a lot of trial and error.

Step 4: Picking the Right Model

- I played around with a few different machine learning models. Started with logistic regression (simple and easy to understand), then moved on to random forests and even a gradient boosting machine (GBM) to see if I could squeeze out some extra accuracy. Scikit-learn in Python made this relatively painless.

Step 5: Training and Testing – Does It Actually Work?

- Split my data into training and testing sets. Trained the models on the training data and then tested them on the testing data to see how well they predicted the outcomes of matches they hadn’t seen before. I used metrics like accuracy and AUC (area under the ROC curve) to evaluate performance.

Step 6: Results – The Moment of Truth

- Alright, so the results weren’t mind-blowing. Logistic regression gave me about 65% accuracy. Random forest was a bit better, maybe 68%. The GBM was the best, pushing it up to around 70%. Not bad, but not good enough to bet the house on.

Step 7: What I Learned (and What I’d Do Differently)

- Data is King (and Queen): The more data, the better. I wish I had access to things like unforced error rates, fatigue levels, and even things like crowd sentiment.

- Feature Engineering is an Art: This is where you can really make a difference. I think I could have come up with some more creative and informative features. Maybe factor in opponent’s strengths and weaknesses.

- Model Selection Matters, But Not as Much as You Think: The model itself is important, but good data and good features will take you further.

- Tennis is Unpredictable: At the end of the day, tennis is a sport with a lot of variance. Upsets happen. That’s part of what makes it exciting!

So, there you have it. My attempt to predict Holger Rune’s matches. It wasn’t perfect, but it was a fun learning experience. Maybe I’ll try again next season with some improvements!