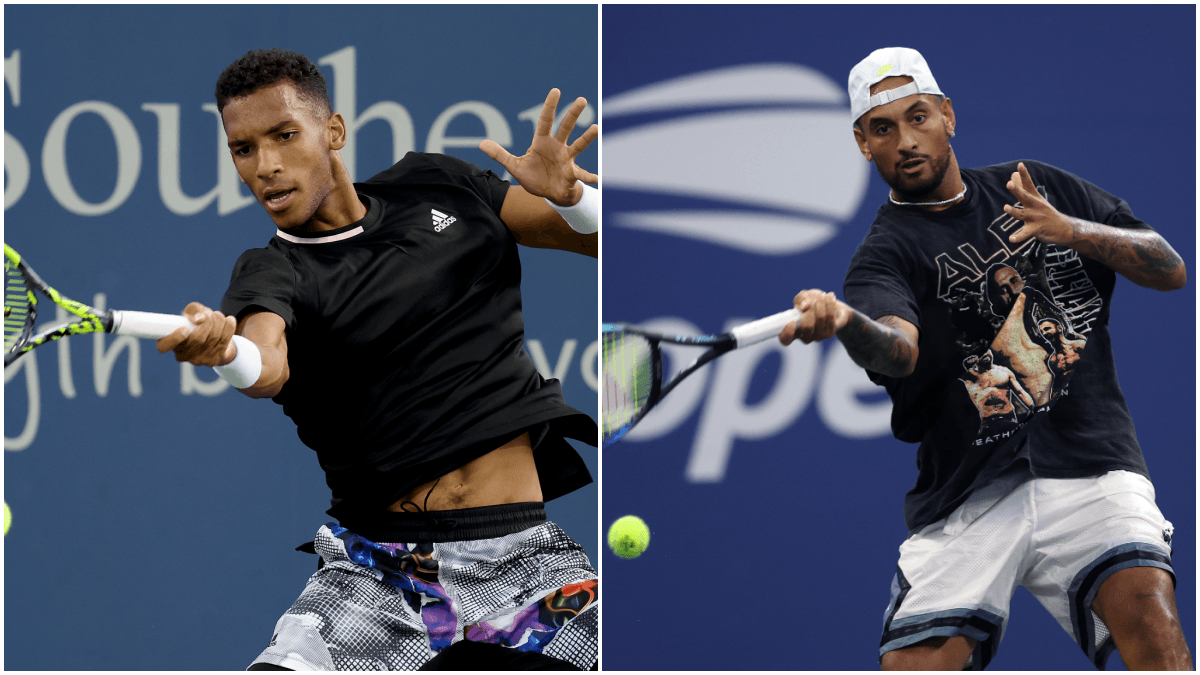

Okay, so today I’m gonna walk you through my attempt at building a prediction model for Felix Auger-Aliassime’s tennis matches. It was a bit of a rollercoaster, lemme tell ya!

First off, I started by gathering data. I’m talking about match results, player stats – everything I could get my hands on. I scraped some websites, downloaded a bunch of CSV files. It was messy, but hey, gotta start somewhere, right?

Next up was cleaning. Oh man, data cleaning. This took forever. Missing values everywhere, the same player spelled three different ways, you name it. I used Python with pandas for this: filled in the missing numeric values with averages where I could, standardized the names, and basically made the data usable.
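To give a feel for that cleaning step, here's a minimal sketch. The column names, the toy rows, and the `NAME_ALIASES` map are all made up for illustration; they aren't my actual scraped data, just the general shape of it.

```python
import pandas as pd

# Toy rows standing in for the scraped CSVs (hypothetical columns and values).
raw = pd.DataFrame({
    "player": ["F. Auger-Aliassime", "Felix Auger Aliassime",
               "felix auger-aliassime ", "F. Auger-Aliassime"],
    "aces": [12.0, None, 8.0, 10.0],
    "first_serve_pct": [0.62, 0.58, None, 0.66],
})

# Hypothetical alias map: lowercase, stripped spelling -> canonical name.
NAME_ALIASES = {
    "f. auger-aliassime": "Felix Auger-Aliassime",
    "felix auger aliassime": "Felix Auger-Aliassime",
    "felix auger-aliassime": "Felix Auger-Aliassime",
}

def clean_matches(df):
    """Standardize player names and fill numeric gaps with column means."""
    out = df.copy()
    stripped = out["player"].str.strip()
    # Look up the canonical name; keep the stripped original if unknown.
    out["player"] = stripped.str.lower().map(NAME_ALIASES).fillna(stripped)
    # Fill missing numeric values with each column's own average.
    numeric_cols = out.select_dtypes(include="number").columns
    out[numeric_cols] = out[numeric_cols].fillna(out[numeric_cols].mean())
    return out

clean = clean_matches(raw)
print(clean)
```

Mean-filling is the quick-and-dirty option. It keeps every row, but it also flattens out whatever the missing values might have told you, so treat it as a starting point.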

Then came feature engineering. I figured raw stats weren’t enough, so I needed to create some new features that might be predictive: win percentage on each court surface, head-to-head records against specific opponents, recent form (wins in the last X matches), all that jazz. More Python and pandas, naturally.
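Here's roughly what those features can look like in pandas. This is a sketch on invented data, with made-up column names (`surface`, `opponent`, `won`) and a window of 3 standing in for "X". I've written recent form as a win rate rather than a raw count, and the `shift(1)` is there so each row only sees matches played before it, otherwise the result leaks into its own features.

```python
import pandas as pd

# Invented match log, one row per match, oldest first (hypothetical columns).
matches = pd.DataFrame({
    "date": pd.to_datetime(["2023-01-10", "2023-02-01", "2023-03-05",
                            "2023-04-12", "2023-05-20", "2023-06-02"]),
    "surface": ["hard", "hard", "clay", "clay", "clay", "grass"],
    "opponent": ["A", "B", "A", "C", "B", "A"],
    "won": [1, 0, 1, 1, 0, 1],
}).sort_values("date").reset_index(drop=True)

def prior_win_rate(results):
    """Running win rate over earlier matches only (NaN when there are none)."""
    return results.shift(1).expanding().mean()

def add_features(df, form_window=3):
    out = df.copy()
    # Recent form: win rate over the previous `form_window` matches.
    out["recent_form"] = (
        out["won"].shift(1).rolling(form_window, min_periods=1).mean()
    )
    # Win rate so far on the same surface.
    out["surface_win_pct"] = out.groupby("surface")["won"].transform(prior_win_rate)
    # Win rate so far against the same opponent.
    out["h2h_win_pct"] = out.groupby("opponent")["won"].transform(prior_win_rate)
    return out

feats = add_features(matches)
print(feats)
```

The first match on a surface or against an opponent comes out as NaN, since there's no history yet. Those gaps still need handling before modeling.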

Okay, now for the fun part: the model. I decided to try a few different machine learning models. Started with a simple logistic regression, then moved on to a random forest, and even dabbled a little with a gradient boosting machine. I used scikit-learn for all of it, which made this part pretty straightforward.
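For reference, setting up those three models in scikit-learn looks something like this. The data here is synthetic (`make_classification`), and the settings are placeholder defaults I picked for the sketch, not the ones from my actual runs.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the feature table: y = 1 means "won the match".
X, y = make_classification(n_samples=300, n_features=6, random_state=0)

models = {
    # Logistic regression benefits from scaled inputs, hence the pipeline.
    "logistic_regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
    "gradient_boosting": GradientBoostingClassifier(random_state=0),
}

win_probs = {}
for name, model in models.items():
    model.fit(X, y)
    # Column 1 of predict_proba is the estimated probability of class 1 (a win).
    win_probs[name] = model.predict_proba(X[:1])[0, 1]
    print(f"{name}: P(win) for the first row = {win_probs[name]:.2f}")
```

All three share the same `fit` / `predict_proba` interface, which is what makes it easy to swap one for another.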

I split the data into training and testing sets. Trained the models on the training data, then evaluated them on the testing data. Looked at metrics like accuracy, precision, recall, F1-score – the whole shebang. The initial results? Not great. Like, barely better than guessing.
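Here's a sketch of that split-train-evaluate loop, again on synthetic data. One thing I've added that isn't in my original workflow description: a `DummyClassifier` that always predicts the most common outcome. It's an assumption on my part that this is the right "guessing" baseline, but it makes "barely better than guessing" something you can actually measure.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the real feature table.
X, y = make_classification(n_samples=400, n_features=6, random_state=0)

# Hold out 25% for testing; stratify keeps the win/loss ratio similar in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

def evaluate(model):
    """Fit on the training set and score on the held-out test set."""
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    return {
        "accuracy": accuracy_score(y_test, pred),
        "precision": precision_score(y_test, pred, zero_division=0),
        "recall": recall_score(y_test, pred, zero_division=0),
        "f1": f1_score(y_test, pred, zero_division=0),
    }

baseline_scores = evaluate(DummyClassifier(strategy="most_frequent"))
model_scores = evaluate(LogisticRegression(max_iter=1000))

for metric in model_scores:
    print(f"{metric}: model={model_scores[metric]:.3f} "
          f"baseline={baseline_scores[metric]:.3f}")
```

With real match data, a random split like this can mix future matches into the training set, so a split by date might be the fairer test.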

So, I started tweaking things. Adjusted the hyperparameters of the models, tried different feature combinations, even experimented with different ways of weighting the data. Still, nothing spectacular.
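The tweaking can be automated with a grid search. This is a sketch, not my exact setup: the grid values are arbitrary, the data is synthetic, and I'm using `class_weight` as one possible example of weighting.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for the real feature table.
X, y = make_classification(n_samples=200, n_features=6, random_state=0)

# Arbitrary example grid: 2 x 2 x 2 = 8 combinations.
param_grid = {
    "max_depth": [3, None],
    "min_samples_leaf": [1, 5],
    "class_weight": [None, "balanced"],
}

search = GridSearchCV(
    RandomForestClassifier(n_estimators=50, random_state=0),
    param_grid,
    cv=5,                # 5-fold cross-validation for each combination
    scoring="accuracy",
)
search.fit(X, y)

print("best params:", search.best_params_)
print("best cross-validated accuracy:", round(search.best_score_, 3))
```

Cross-validating inside the search means the test set stays untouched until the very end, which matters a lot when there isn't much data to begin with.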

Here’s where I think I messed up. I didn’t have enough data. Tennis match data, especially for one specific player, is kinda scarce. Plus, there’s so much randomness in tennis. A slight injury, a bad call, a lucky shot – any of those can swing a match. It’s tough to capture all that in a model.
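To put a rough number on the "not enough data" problem, here's a back-of-the-envelope check using the normal approximation for a proportion. The 55% accuracy and 100 test matches are hypothetical figures chosen for illustration, not my actual results.

```python
import math

def accuracy_interval(accuracy, n_matches, z=1.96):
    """Approximate 95% interval for an accuracy measured on n_matches."""
    std_err = math.sqrt(accuracy * (1 - accuracy) / n_matches)
    return accuracy - z * std_err, accuracy + z * std_err

# Hypothetical: 55% accuracy measured on a 100-match test set.
low, high = accuracy_interval(0.55, 100)
print(f"95% interval: {low:.3f} to {high:.3f}")
```

With those made-up numbers the interval runs from a bit above 45% to a bit under 65%, so it straddles 50%. On a test set that small, a model that looks slightly better than a coin flip can't really be told apart from one.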

In the end, I didn’t get a super accurate prediction model. But I learned a ton! I got better at data cleaning, feature engineering, and model building. And I realized that some things are just inherently hard to predict. Still, I had fun giving it a shot. Maybe I’ll try again sometime with more data and a different approach.